Conference Overview

Modern warfare is making increasing use of technology-mediated operations such as those involving weaponized remotely piloted aircraft (RPAs), robotic agents and vehicles with autonomous capabilities, and cyber-attack strategies. These types of operations raise many important questions related to the philosophical and ethical implications of technology-mediated warfare as well as the psychological, emotional, and social consequences of these systems both for their users and their victims. The broad purpose of this conference is to bring together a core group of scholars, researchers, and stakeholders from Europe and the US to examine several of these important questions in detail. Specifically, the conference will allow participants to convene in Rome for an important and unique discussion of technology-enhanced warfare that includes perspectives from both ethics and the social sciences. Including both social science and ethical perspectives in this discussion is important for two reasons. First, social scientists have not often been party to conversations about modern warfare strategies. Second, there is a growing realization among many philosophers that many ethical considerations, including those related to warfare technologies, need to be informed by empirical findings about how people and social groups actually behave.

The conference will take place at the Notre Dame Rome gateway facility (see https://international.nd.edu/global-gateways/rome/) on October 17-18, 2017, in Room 202. The conference hotel is The Lancelot Hotel (https://www.lancelothotel.com/).

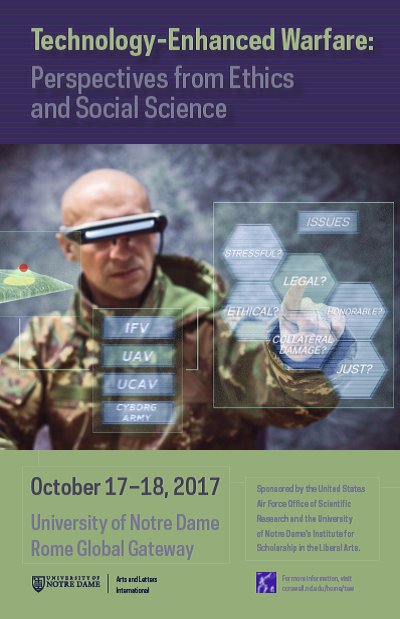

The conference program flyer is available for download here.

Conference Talks & Abstracts

Day 1 Ethics Perspective Talks

Gen. Robert Latiff (Ret.) – Context Remarks – The Importance of Ethics for Technology Enhanced Warfare

Thomas E. Creely – Moderator of Discussions

George Lucas – An ‘Aegis System’ for the Cyber Domain: Automated Intelligent Defense Against Multiple High-speed, High-Intensity Cyber Attacks

Abstract: Big data in DoD cyber systems is already difficult to manage and nearly impossible to track in real time. Identifying genuine threats and detecting actual attacks against defense systems is simply too difficult for human operators to manage alone. AI systems avoid fatigue and reduce the probability of error. They can perform virtually instantaneous assessments of probability and risk, and evaluate the authenticity and potential for harm of each of an enormous number of detected sources and attacks. The analogy is with the Cold War “Aegis” destroyer missile defense system, designed to respond to and repel an enormous barrage of incoming enemy missiles with a reaction time exceeding the capacity of human operator response. Use of AI in cyber defense and proactive retaliation is unavoidable.

But even apart from the prospects of liability for occasional error (a cyber equivalent of the U.S.S. Vincennes in 1988), how should such systems be deployed? What degree of retaliation should they be equipped to inflict, and of what sort (kinetic and real-world, or a cyber equivalent)? Should key industries be equipped with such systems, permitting them to repel, and retaliate directly against, attacks aimed at industrial crippling and sabotage? The military has never before been authorized to permit commercial entities in the private sector to defend themselves directly, but this seems required in the cyber case, as these entities are the single most vulnerable set of targets.

Fritz Allhoff, Andrew Marquis & Keagan Potts – Corporate Digital Citizenship and the New Cold War

Abstract: If international aggression is economically and politically cheap, then it will become more common. Helen Frowe calls this hypothesis the proliferation problem and discusses it in light of remote warfare. At present, one salient vector of such proliferation is Russia’s social media-based Active Measures (AM) campaigns, which, according to Clint Watts’ recent testimony before the U.S. Senate Select Committee on Intelligence, “shifted aggressively toward U.S. audiences in late 2014 and 2015.” Watts and his fellow witness Dr. Roy Godson agree: Through AM, the Kremlin promotes its interests by sowing economic, political, and cultural chaos in Western democracies like the U.S., weakening their international influence by encouraging the destabilization of the Walzerian ‘common life’ within them.

Don Howard – Virtue Ethics and Technology Enhanced Warfare

Abstract: In 2014, the National Research Council and the National Academy of Sciences released a report on Emerging and Readily Available Technologies and National Security—A Framework for Addressing Ethical, Legal, and Societal Issues. Funded by DARPA, this report includes extensive recommendations for how institutions such as DARPA or other government agencies and private weapons R&D companies can build into their work a serious, sustained, and sophisticated engagement with ethical questions. It is a remarkable document that should be more widely known.

The present paper will summarize the chief recommendations of the report and then ask how such efforts to integrate an engagement with ethics into the everyday work of weapons R&D can be enhanced by the employment of the virtue ethics framework. More specifically, the paper will ask about the civic virtues most appropriate for the well-functioning of communities of weapons developers. The paper will draw heavily upon the theoretical framework outlined in Shannon Vallor’s 2016 book, Technology and the Virtues: A Philosophical Guide to a Future Worth Wanting, while expanding that framework to accommodate reflection on not just individual actors but communities of actors. It is suggested that the moral responsibilities of weapons R&D agencies and corporations do not just supervene on the responsibilities of individual scientists, engineers, managers, and directors, but that such communities of actors must be explicitly engineered to optimize the moral competency of those moral communities.

Matthias Scheutz – The Need for Ethical and Moral Competence in Autonomous Systems

Abstract: As autonomous systems gain in cognitive complexity and are increasingly deployed in both military and civilian domains, the need to ensure their proper autonomous functioning and decision-making is becoming more and more urgent. In particular, autonomous systems with potentially lethal kinetic force, but also systems deployed in the social realm, must be able to conform to the legal, ethical, and moral principles imposed and expected by human societies; otherwise, these systems will cause more harm than good. In this presentation, I will argue for the need to integrate ethical and moral principles deeply into autonomous system architectures to guarantee their ethical behavior. I will also take this opportunity to criticize some of the ongoing research programs in artificial intelligence and robotics (such as deep neural networks) as too dangerous to be employed in autonomous systems. And I will provide a brief overview of the alternative approach we are taking with our cognitive robotic DIARC architecture, which has increased levels of introspection and awareness and uses explicit ethical principles and formally rigorous ethical reasoning to arrive at the provably best possible decision given the system's knowledge and constraints.

John Sullins – Trusting Ethical Decision Making in Autonomous Weapons Systems: what is appropriate?

Abstract: Ethical trust in robotics is the question of whether or not it is ethical to believe a particular robotic system is capable of behaving in a way that the user would be willing to ethically support, even though one might not know exactly how that robotic system makes the decisions to act that it does.

Once we distinguish ethical trust from trust in general, and from the engineering design concerns of technological reliability and safety, we can see that what remains is the trust that these systems will behave in ways that are consistent with, or even superior to, human ethical decision making.

In democratic societies, two important levels of ethical trust occur between the human agents involved in military and security settings. One is the ethical trust granted by the citizens of a particular state in its military or security personnel. This is the trust that they will behave in an ethical and professional manner even when faced with dangerous and stressful situations. When that trust is betrayed, it often results in civil unrest. The second level is the ethical trust that is found between members of military or security forces, who must trust that they are receiving ethically justifiable orders and that their subordinates will execute such orders in an ethical and professional manner. Any legitimate military or security force has developed a training system that, more or less, can be ethically trusted, and when that trust is betrayed, there are methods to punish those who betray it.

Autonomous and semi-autonomous systems that are designed to make, or influence, decisions that result in harm complicate both of these relationships of ethical trust. Once, we could be somewhat reassured that a fellow citizen who shared at least some of our ethical motivations was making these difficult decisions. Now this has changed, and we have to face the fact that a growing number of these decisions are made by a new kind of robotic combatant. A robotic combatant is something with which we can have no democratic relationship or shared values and whose operations can be quite inscrutable to non-experts.

In this paper, we will look at a potential ethical justification for placing trust in robotic autonomous systems in a military and security context. In this way, we might be able to maintain ethical trust in the military and security forces deployed by democratic societies.

Markus Christen – Criteria for the Ethical Use of (Semi-) Autonomous Systems in Security and Defense

Abstract: Information technology has become a decisive element in modern warfare. Armed forces, but also police, border control and civil protection organizations increasingly rely on robotic systems with growing autonomous capacities. This poses tactical and strategic, but also ethical and legal issues that are of particular relevance when procurement organizations are evaluating such systems for security applications. In order to support the evaluation of such systems from an ethical perspective, this talk presents the result of a study funded by Armasuisse Science+Technology, the center of technology of the Swiss Department of Defense, Civil Protection and Sports, which is responsible for the procurement of security technology.

The goal of the research is twofold. First, it should support the procurement of security and defense systems, e.g., to avoid reputational risks or costly assessments for systems that are unethical and entail political risks (Switzerland is a direct democracy, and the procurement of costly defense technology may become the object of a vote). Second, the research should contribute to the international discussion on the use of autonomous systems in the security context (e.g., with respect to the United Nations Convention on Certain Conventional Weapons).

The study led to an evaluation system for the ethical use of autonomous robotic systems in security applications, focusing on two types of applications: first, systems whose purpose is not to destroy objects or to harm people (e.g., rescue robots, surveillance systems), although dual use cannot be excluded; second, systems that deliberately possess the capacity to harm people or destroy objects, including defensive and offensive, lethal and non-lethal systems. After a general introduction to the topic of this research project, the talk outlines the proposed evaluation system, which consists of three steps: deciding on the applicability of the evaluation system (step 1), deciding on the intended use of the robotic system (step 2), and, depending on step 2, analyzing the criteria themselves (step 3).

Shannon Vallor – The Troubles We Do Not Avoid: Human Character and the Emerging Technologies of War

Abstract: The emergence of new technologies of war, from the crossbow, to the rifle, to landmines and autonomous military weapons systems, has always raised questions of moral character, both with respect to those virtues of character distinctive to the military profession, and with respect to the virtues of human persons and societies writ large. Yet in the modern era, moral concerns about technologies of war are often crowded out by utilitarian appeals to human security and efficiency, and/or reduced to deontological considerations of international humanitarian law. In this talk I will explore the pressure that emerging technologies of war place on our existing discourse about the ethics of warfare, and the urgent need to reclaim a moral narrative of virtue in both the military and broader human contexts of war. As a framing device for this discussion, I take up Aristotelian, Thomistic and Confucian analyses of the virtues of martial and moral courage, and their ability to enrich our understanding of specific ethical challenges presented by autonomous or semi-autonomous weapons systems. I conclude by showing how effective analysis of these challenges requires consideration of technomoral courage as a necessary and cardinal virtue of contemporary human character.

Day 2 Social Science Perspective Talks

Gen. Robert Latiff (Ret.) – Context Remarks – The Importance of Social Science for Technology Enhanced Warfare

Thomas E. Creely – Moderator of Discussions

Hugh Gusterson – The Justifications and Effects of Remote Killing

Abstract: Given the asymmetry of power and vulnerability, drone operations do not constitute a form of war but of hunting. What are the psychosocial dynamics and the ethics of this kind of remote hunting of humans? As became clear during a controversy over the introduction of a new medal for drone operators, many in the military do not believe that drone operators have been in combat or have been presented with opportunities for the display of valor that is a distinguishing mark of the military vocation. On the other hand, there are increasing reports of high levels of PTSD among drone operators, which suggests, first, that we may need to reconceptualize combat trauma in the digital age and, second, that killing from a great distance is not as antiseptic as critics of drone operations suggest if this killing is mediated by intimate video imagery. The psychosocial space of drone operations is one of remote intimacy rather than remoteness pure and simple.

This is not to say that drone operations are necessarily a breach of military ethics and international law. In many cases, they are, but this depends on the targeting protocols in the theater of operations and on the legal status of target countries. US attacks on Somalia, Yemen and Waziristan are deeply problematic under international law, given that the U.S. is not in a formal state of war with those countries; thus U.S. allies have largely refused to participate in these attacks. Drone operations in Afghanistan and Iraq, taking place in the context of a wider theater of war, are different. But the defensibility of individual strikes varies with protocols for selecting targets. As is clear from numerous confirmed reports of civilian casualties and mistaken target identification, these protocols are often quite imperfect.

The paper concludes with a suggestion of a legal framework for regulating drone strikes.

Wayne Chappelle – Psychological Health Outcomes of USAF Weapon Strike Predator/Reaper Remotely Piloted Aircraft Operators

Abstract: USAF Weapon Strike Predator/Reaper Remotely Piloted Aircraft (RPA) operators are critical to intelligence, surveillance, reconnaissance, and precision weapon strike missions in support of U.S. and allied nation operations. This constantly evolving form of warfare poses challenges to aviation medicine providers tasked with sustaining the health and wellness of this unique and rapidly growing aircrew population. The purpose of this presentation is to provide salient outcome data from empirically based studies and standardized interviews assessing the main sources of occupational stress/conflict, mental health issues, and dilemmas among RPA weapon-strike operators sustaining around-the-clock missions. The main sources of occupationally related psychological health concerns and conflicts are identified, as well as the breadth and depth of psychological reactions that RPA operators routinely experience prior to, during, and following weapon strikes. Results are compared and contrasted with airmen operating in more traditional battlefield roles. The results of the study provide unique insights and actionable recommendations for shaping current and future research, as well as the delivery of mental healthcare services for such a unique group of military personnel.

Wil Scott – RPAs in Irregular Conflicts: A Sociological Analysis

Abstract: At the start of the Global War on Terror, the idea of deploying aerial, armed robots would have been, in the words of security analyst P.W. Singer, “the stuff of Hollywood fantasy.” These days, more than 7,000 aircraft popularly called “drones” (in Air Force terminology, Remotely-Piloted Aircraft, or RPAs) are in operation over warzones in Afghanistan, Iraq, Syria and elsewhere. Modern militaries, formidable as they are, do not have an enviable track record in irregular conflicts of the type found there: ones in which a fully-equipped military with an army, navy, and air force takes on a vastly inferior military force or a “rag-tag” group of violent insurgents having no formal military at all. Discussion of the use of RPAs in such settings typically centers on legal and ethical considerations. This talk augments such concerns by focusing on another dimension: how wise is it to utilize RPAs in irregular warzones? I will consider dimensions of this question by drawing upon a burgeoning literature on irregular warfare from a sociological perspective.

Mike Villano, Dan Moore, Julaine Zenk, Chuck Crowell & Markus Christen – Moral Decision Making in an RPA Simulation Context

Abstract: This talk will provide an overview of our research on moral decision-making during a remotely piloted aircraft (RPA) simulation. This research is important because RPA pilots have been shown to undergo unique forms of occupational and moral stresses which have yet to be properly investigated. Through the use of an innovative and realistic RPA simulation including embedded dilemmas patterned after the well-studied Trolley dilemma, we studied the moral decisions made as well as related physiological and psychological responses using civilian and military student populations. The talk will outline the distinct differences in decision patterns, physiological responses, and moral reasoning used both within and across these populations. Several implications of these findings will be discussed.

Andrew Bickford – Kill-Proofing the Soldier: U.S. Military Biotechnology Programs and the Ethics of Military Performance Enhancement

Abstract: All militaries try to develop a “winning edge” in warfare. More often than not, these attempts focus on new weapons systems and weapons platforms, on new ways of maximizing the offensive capabilities of a military through firepower. These attempts can also involve a focus on the training and development of soldiers, of developing performance enhancements to make soldiers fight better, longer, and smarter than the enemy, and counter human frailty on the battlefield. These concerns and problems have long held the interest of the U.S. military.

My talk examines the bioethics of current US military research projects, programs, and policies designed to improve and enhance soldiers’ combat capabilities and performance and their ability to resist war trauma through the development of new and novel biotechnologies and pharmacology. Specifically, I look at two ideas for “internally-armoring” soldiers: Dr. Marion Sulzberger’s Idiophylactic Soldier of the 1960s, and DARPA’s “Inner Armor” program of the mid-2000s. I am interested in how military officials, military medical professionals, and other researchers discuss, imagine, and conceive of ways to make “super soldiers” who can better withstand combat and combat trauma, and how they imagine making war trauma a thing of the past. These projects give us a chance to think about how we design and “make” our soldiers, what we want from them, and ultimately, what we are willing to do in order to achieve desired effects and outcomes.

Catherine Tessier – Shared Human-Robot Control: Frameworks for Embedding Moral and Ethical Values

Abstract: The talk will focus on issues raised by the implementation of ethics in machines whose control is shared between a human operator and the machine itself through its decision functions. In particular, artificial “ethical reasoning”:

- has to be based on ethical frameworks, or (moral) rules, or moral values, that include concepts that may be difficult to instantiate (e.g., what is a "right" action?);

- should implement an adaptive hierarchy of moral values depending on the context;

- should compare and weigh up solutions and arguments that may involve concepts belonging to different fields (e.g., human lives and tangible assets);

- is likely to be biased by the designer's own point of view and by “usual” ways of considering things in a given society; this may be conscious or not;

- is likely to conflict with the operator's own moral values and preferences; in such cases the question of which agent (the human or the machine) holds the "best" decision is raised.

We will start from an ethical dilemma situation involving a robot with a shared control, and give some features of a formal model taking several ethical frameworks into account. Then we will show how the different frameworks respond to the dilemma and to what extent those calculated decisions may influence the operator’s decision. A final discussion will allow us to deal with the various model biases and subjectivity sources that are inherent in the approach.

Matthias Scheutz and Bertram Malle – Moral Robots? Investigating Human Perceptions of Moral Robot Behavior

Abstract: Autonomous social robots are envisioned to be deployed in many different application domains, from health and elder care settings, to combat robots on the battlefield. Critically, these robots will have to have the capability to make decisions on their own to varying degrees, decisions that should ideally conform to moral principles and ethical expectations. While there is currently no autonomous "explicit ethical agent" (in Moore's sense) that can make truly informed ethical decisions, we can still start to empirically investigate human reactions to such agents as if they existed using "Wizard-of-Oz"-style experiments. For it is important to understand not only how to make robots identify and properly process ethical information, but also how such robots would be perceived and accepted by humans. In this presentation, I will provide an overview of our work on evaluating human perceptions of and reactions to "moral robots" in human-robot interaction experiments. Specifically, I will report results from a multi-year sequence of studies in which human subjects were exposed to different types of robotic agents (with human-like and robotic appearance) that may protest human commands for a range of (ethically justified) reasons. Critically, the results suggest that humans are inclined to take robot protest seriously and that robot appearance does not seem to have an influence on human judgments.

Conference Schedule

October 16, 2017, Pre-conference Day

| Time | Event |
|---|---|
| 10:00 – 17:00 | Hotel check-in |
| 19:00 | Welcome reception for speakers (19:00) and dinner (19:30) at The Lancelot Hotel |

October 17, 2017, Conference Day 1: Ethical Perspectives

| Time | Event |
|---|---|
| 09:00 – 09:10 | Crowell – Welcome and opening remarks |
| 09:10 – 09:25 | Latiff – Day 1 Conference Context: The Importance of Ethics |
| 09:30 – 10:15 | Presentation 1: Lucas – Military Ethics and Cyber Conflict |
| 10:20 – 11:05 | Presentation 2: Allhoff/Marquis/Potts – Corporate Digital Citizenship and the New Cold War |
| 11:10 – 11:35 | Discussion – Creely, Moderator |
| 11:35 – 11:55 | Break |
| 12:00 – 12:45 | Presentation 3: Howard – Virtue Ethics and Technology Enhanced Warfare |
| 12:50 – 13:35 | Presentation 4: Scheutz – The Need for Ethical and Moral Competence in Autonomous Systems |
| 13:40 – 14:05 | Discussion – Creely, Moderator |
| 14:10 – 15:10 | Working lunch provided in meeting facility |
| 15:15 – 16:00 | Presentation 5: Sullins – Designing Ethical Decision Making in Autonomous Weapons Systems: what is appropriate? |
| 16:05 – 16:50 | Presentation 6: Christen – Criteria for the Ethical Use of (Semi-) Autonomous Systems in Security and Defense |
| 16:55 – 17:20 | Discussion – Creely, Moderator |
| 17:25 – 17:45 | Break |
| 17:50 – 18:35 | Presentation 7: Shannon Vallor – The Troubles We Do Not Avoid: Human Character and the Emerging Technologies of War |
| 18:40 – 19:05 | General Discussion – Creely, Moderator |
| 19:45 | Conference dinner for speakers at Naumachia (walking distance) |

October 18, 2017, Conference Day 2: Social Science Perspectives

| Time | Event |
|---|---|
| 09:00 – 09:10 | Crowell – Beginning remarks |
| 09:10 – 09:25 | Latiff – Day 2 Conference Context: The Importance of Social Science |
| 09:30 – 10:15 | Presentation 8: Gusterson – The Justifications and Effects of Remote Killing |
| 10:20 – 11:05 | Presentation 9: Chappelle – Psychological Health Outcomes of USAF Weapon Strike Predator/Reaper Remotely Piloted Aircraft Operators |
| 11:10 – 11:35 | Discussion – Creely, Moderator |
| 11:35 – 11:55 | Break |
| 12:00 – 12:45 | Presentation 10: Scott – RPAs in Irregular Conflicts: A Sociological Analysis |
| 12:50 – 13:35 | Presentation 11: Villano/Moore/Zenk/Crowell/Christen – Moral Decision Making in an RPA Simulation Context |
| 13:40 – 14:05 | Discussion – Creely, Moderator |
| 14:10 – 15:10 | Working lunch provided in meeting facility |
| 15:15 – 16:00 | Presentation 12: Bickford – Kill-Proofing the Soldier: U.S. Military Biotechnology Programs and the Ethics of Military Performance Enhancement |
| 16:05 – 16:50 | Presentation 13: Tessier – Shared Human-Robot Control: Frameworks for Embedding Moral and Ethical Values |
| 16:55 – 17:20 | Discussion – Creely, Moderator |
| 17:25 – 17:45 | Break |
| 17:50 – 18:35 | Presentation 14: Scheutz and Malle – Moral Robots? Investigating Human Perceptions of Moral Robot Behavior |
| 18:40 – 19:05 | General Discussion – Creely, Moderator |
| 19:05 | Crowell – Concluding remarks |
| 19:45 | Conference dinner for participants at The Hotel Forum (walking distance) |

Speaker Bios

Francis (Fritz) Allhoff, Department of Philosophy, Western Michigan University

Fritz Allhoff is a Professor in the Department of Philosophy and a Community Professor in the Homer Stryker M.D. School of Medicine at Western Michigan University. Along with earning a PhD in Philosophy, Fritz graduated from the University of Michigan Law School and went on to clerk for the Honorable Chief Justice Craig F. Stowers of the Alaska Supreme Court. Since 2015, he has served as a Fellow at Stanford Law School’s Center for Law and the Biosciences.

Fritz teaches and writes in the area of applied ethics (especially biomedical ethics and the ethics of war), ethical theory, and philosophy of law. His first monograph was Terrorism, Ticking Time-Bombs, and Torture (University of Chicago Press, 2012). Fritz is now working on a monograph tentatively entitled Unlimited Necessity: The Moral and Legal Basis for Preferring Lesser Evils. He has edited books on a range of topics, including Binary Bullets: The Ethics of Cyberwarfare (Oxford University Press, 2016). His popular articles have appeared in Slate and The Atlantic.

Andrew Bickford, Department of Anthropology, Georgetown University

Dr. Andrew Bickford is an Assistant Professor of Anthropology at Georgetown University, and taught at George Mason University from 2005 to 2017. He received his Ph.D. in Anthropology from Rutgers University in 2002. Dr. Bickford conducts research on war, militarization, masculinity, biotechnology, bioethics, and the state in the United States and Germany. His current research examines biotechnology research in the United States military, and the bioethics of the military’s efforts to develop and make “super soldiers.” Dr. Bickford’s fieldwork in Germany with former East German army officers examined how states “make” and “unmake” soldiers, and the experiences of military elites who had power and lost it.

Dr. Bickford was most recently a 2017 Summer Institute of Museum Anthropology Faculty Fellow at the Smithsonian Institution. Dr. Bickford was a 2014-2015 Residential Fellow at the Woodrow Wilson International Center for Scholars in Washington, D.C., and from 2002 to 2004, a National Institute of Mental Health post-doctoral fellow at the University of California, Berkeley School of Public Health. Dr. Bickford has also received grants and fellowships from Fulbright, the Social Science Research Council, the Wenner-Gren Foundation, the Woodrow Wilson International Center for Scholars, and Rutgers University.

Dr. Bickford is the author of Fallen Elites: The Military Other in Post-Unification Germany (Stanford University Press, 2011). He is also the co-author (with the Network of Concerned Anthropologists) of The Counter-Counterinsurgency Manual, or, Notes on Demilitarizing American Society (University of Chicago Press/Prickly Paradigm Press, 2009). He is currently working on a second manuscript based on his current research on biotechnology and bioethics in the US military.

Thomas E. Creely, Ethics and Emerging Military Technology Graduate Certificate Program, United States Naval War College

Thomas E. Creely is Associate Professor of Ethics and Director of the Ethics and Emerging Military Technology Graduate Certificate Program at the United States Naval War College. He is also Lead for Leadership and Ethics in Brown University’s Executive Master in Cybersecurity. Tom is a member of The Conference Board Global Business Conduct Council and is Co-Chair of the Association for Practical and Professional Ethics Business Ethics Special Interest Group.

Charles R. Crowell, Department of Psychology, University of Notre Dame (Conference Organizer)

Chuck Crowell is a professor in the Department of Psychology. He also directs the Computing and Digital Technologies Program, an interdisciplinary technology-related minor for liberal arts students. Along with his empirical and theoretical work on basic mechanisms of learning and motivation, Prof. Crowell oversees the eMotion and eCognition lab at Notre Dame, which is devoted to investigating a spectrum of psychological phenomena, including the moral decision-making work using the RPA simulator that will be described in the Day 2 talk. He will also coordinate the validation of a Pilot Assessment Tool at Notre Dame and the United States Air Force Academy in collaboration with Dr. Villano and Maj. Moore.

Wayne Chappelle, Aerospace Medicine Consultation Division, US Air Force Research Laboratory

Wayne Chappelle is the chief of aeromedical operational clinical psychology at the U.S. Air Force School of Aerospace Medicine. Dr. Chappelle also serves on the faculty in the Department of Psychiatry at Wright State University. Dr. Chappelle studies mental health issues among military personnel and has conducted several studies related to RPA pilots and crews.

Markus Christen, UZH Digital Society Initiative, University of Zurich

Markus Christen is a Senior Research Fellow at the Centre for Ethics and Managing Director of the UZH Digital Society Initiative of the University of Zurich. He received his MSc in philosophy, physics, mathematics and biology at the University of Berne and his PhD in neuroinformatics at the Federal Institute of Technology in Zurich. Among other appointments, he was a Visiting Scholar at the Psychology Department of the University of Notre Dame (IN, USA). He coordinates the CANVAS Consortium (Creating an Alliance for Value-Sensitive Cybersecurity) on cybersecurity and ethics, funded by the European Commission, and he is a member of the Human Brain Project’s Ethics Advisory Board. His research interests are in empirical ethics, neuroethics, ICT ethics, and data analysis methodologies. He has published in various fields (ethics, complexity science, and neuroscience). Markus is collaborating with Drs. Crowell and Villano and Maj. Moore on the RPA simulation project being conducted at Notre Dame and the United States Air Force Academy.

Hugh Gusterson, Department of Anthropology, George Washington University

Dr. Gusterson’s research focuses on the interdisciplinary study of the conditions under which particular bodies of knowledge are formed, deployed and represented, with special attention to military science and technology. He has written on the cultural world of nuclear weapons scientists and antinuclear activists, on counterinsurgency warfare in the Middle East, and on drone warfare. His books include Nuclear Rites (1996), People of the Bomb (2004) and Drone: Remote Control Warfare (MIT Press, 2016). He writes a regular column for the Bulletin of the Atomic Scientists and for Sapiens.

Don Howard, Department of Philosophy and Reilly Center, University of Notre Dame

Don Howard is the former director and a Fellow of the University of Notre Dame’s Reilly Center for Science, Technology, and Values and a Professor in the Department of Philosophy. With a first degree in physics (B.Sc., Lyman Briggs College, Michigan State University, 1971), Howard went on to obtain both an M.A. (1973) and a Ph.D. (1979) in philosophy from Boston University, where he specialized in philosophy of physics under the direction of Abner Shimony. A Fellow of the American Physical Society, and Past Chair of APS’s Forum on the History of Physics, Howard is an internationally recognized expert on the history and philosophy of modern physics. Howard has been writing and teaching about the ethics of science and technology for many years. He also serves as the Secretary of the International Society for Military Ethics. Among his current research interests are ethical and legal issues in cyberconflict and cybersecurity as well as the ethics of autonomous systems.

George Lucas, Professor Emeritus, Graduate School of Business and Public Policy, Naval Postgraduate School

Dr. Lucas is an Emeritus Professor of Philosophy and Public Policy at the Graduate School of Business and Public Policy, Naval Postgraduate School. From 2009 to 2013, he held the Distinguished Chair in Ethics in the Stockdale Center for Ethical Leadership at the U.S. Naval Academy. In addition, Dr. Lucas presently serves as Research Fellow in the French Military Academy, a Lincoln Fellow in Ethics in the Lincoln Center for Applied and Professional Ethics at Arizona State University, the Director of the “Consortium on Emerging Technologies, Military Operations, and National Security,” Editor of the “Military and Defense Ethics” Series published by Ashgate Press, and President of the International Society for Military Ethics (ISME). Dr. Lucas’s research interests have centered on the philosophical and ethical issues related to warfare and armed conflict. His latest book, Military Ethics: What Everyone Needs to Know, will soon be published by Oxford University Press.

Maj Gen (Ret) Robert H. Latiff, George Mason University & University of Notre Dame

Robert Latiff retired from the U.S. Air Force as a Major General in 2006. He is a private consultant, providing advice on advanced technology matters to corporate and government clients and to universities. He is a member of the board of several high-technology companies. Dr. Latiff was Chief Technology Officer of Science Applications International Corporation’s space and geospatial intelligence business. He is the Chairman of the National Materials and Manufacturing Board and a member of the Air Force Studies Board of the National Academies. His current work includes a study for the Department of Defense on the ethical implications of emerging and dual-use technologies. Dr. Latiff is an active member of the Intelligence Committee of the Armed Forces Communications and Electronics Association (AFCEA). Maj Gen Latiff’s last active duty assignment was at the National Reconnaissance Office, where he was Director, Advanced Systems and Technology and Deputy Director for Systems Engineering. He has also served as the Vice Commander, USAF Electronic Systems Center; Commander of the NORAD Cheyenne Mountain Operations Center; and Program Director for the Air Force E-8 JSTARS surveillance aircraft.

Bertram Malle, Department of Cognitive, Linguistic, and Psychological Sciences, Brown University

Bertram F. Malle was trained in psychology, philosophy, and linguistics at the University of Graz, Austria, and received his Ph.D. in Psychology from Stanford University in 1995. Between 1994 and 2008 he was Assistant, Associate, and Full Professor of Psychology at the University of Oregon and served there as Director of the Institute of Cognitive and Decision Sciences from 2001 to 2007. Since September 2008 he has been Professor of Psychology in the Department of Cognitive, Linguistic, and Psychological Sciences at Brown University. He is a past President of the Society for Philosophy and Psychology. Author of over 70 articles and chapters, he has also co-edited three published volumes: Intentions and intentionality (2001, MIT Press), The evolution of language out of pre-language (2002, Benjamins), and Other minds (2005, Guilford). He authored a monograph on How the mind explains behavior (2004, MIT Press), and his current book project is entitled Social Cognitive Science.

Maj. Dan Moore, Department of Behavioral Sciences, United States Air Force Academy

Daniel Moore is a Major in the U.S. Air Force and an Instructor of Behavioral Sciences and Leadership at the U.S. Air Force Academy. He holds an M.A. in Forensic Psychology from the University of North Dakota and a B.S. in Psychology from Colorado State University. His research interests include decision making, language, influence, and religion. Maj. Moore is collaborating with Drs. Crowell and Villano on the RPA simulation project and on the Pilot Assessment Tool validation project that will be conducted at Notre Dame and the United States Air Force Academy.

Matthias Scheutz, Department of Computer Science, Tufts University

Matthias Scheutz is Professor of Computer and Cognitive Science in the Department of Computer Science at Tufts University and Adjunct Professor in the Department of Psychology. He has over 200 peer-reviewed publications in artificial intelligence, artificial life, agent-based computing, natural language processing, cognitive modeling, robotics, human–robot interaction, and foundations of cognitive science.

Wilbur Scott, Department of Behavioral Sciences, United States Air Force Academy

Dr. Scott received his BA in Sociology/Psychology at St. John's University and his doctorate in Sociology from Louisiana State University. He served on the faculty of the University of Oklahoma in the Department of Sociology from 1975 to 2005 before joining the faculty at the Air Force Academy. Dr. Scott’s areas of specialization include the study of the military, violence, and war, and of decision-making in complex socio-cultural environments. He has conducted extensive interviews and collected oral histories from veterans of Vietnam, Iraq, and Afghanistan, and has written extensively about the experience of war and its aftermath. His 1993 book, The Politics of Readjustment: Vietnam Veterans Since the War (Aldine de Gruyter Publishers), was re-published in 2004 under the title Vietnam Veterans Since the War: The Politics of PTSD, Agent Orange, and the National Memorial (University of Oklahoma Press).

John Sullins, Philosophy Department, Sonoma State University

John Sullins (Ph.D., Binghamton University (SUNY), 2002) is a full professor at Sonoma State University. His specializations are philosophy of technology, philosophical issues of artificial intelligence/robotics, cognitive science, philosophy of science, engineering ethics, and computer ethics. His recent research focuses on the technologies of Robotics, AI and Artificial Life: how they inform traditional philosophical questions of life and mind, how they affect society, and how successful autonomous machines can be designed ethically. His work also crosses into the fields of computer and information technology ethics as well as the use of computer simulations in studying the evolution of morality. His recent publications have focused on artificial agents and their impact on society as well as the ethical design of successful autonomous information technologies, including the ethics of the use of robotic weapons systems.

Catherine Tessier, The French Aerospace Lab ONERA

Catherine Tessier is a senior researcher at ONERA, Toulouse, France. She received her doctorate in 1988 and her accreditation as research director in 1999. She is also a part-time professor at ISAE-SUPAERO, Toulouse. Her research focuses on authority-sharing between robot and human, on the implementation of ethical frameworks and moral values into robots, and on ethical issues related to the use of robots. Since January 2015 she has been head of EDSYS, the Doctoral School on Systems, University of Toulouse. She is a member of CERNA (The French advisory board for the ethics of Information and Communication Technologies), of COERLE (Inria’s operational ethics committee for the evaluation of legal and ethical risks), as well as of the University of Toulouse’s CERNI (Ethics committee for non-interventional research). She coordinated CERNA’s report on the doctoral program “Research ethics and scientific integrity,” which is the basis of the doctoral training program on ethics and scientific integrity of the University of Toulouse.

Shannon Vallor, Department of Philosophy, Santa Clara University

Shannon Vallor is the William J. Rewak, S.J. Professor in the Department of Philosophy at Santa Clara University in Silicon Valley, and President of the international Society for Philosophy and Technology (SPT). Her research explores questions related to the philosophy and ethics of emerging technologies, among other issues. Her current research focuses on the impact of emerging technologies, particularly those involving automation, on the moral and intellectual habits, skills and virtues of human beings, that is, on human character. Prof. Vallor's most recent book is Technology and the Virtues: A Philosophical Guide to a Future Worth Wanting (Oxford University Press, 2016).

Michael Villano, Department of Psychology, University of Notre Dame

Michael Villano is a Research Assistant Professor in the Department of Psychology at the University of Notre Dame. Dr. Villano provides research computing consulting for the department and serves as the Assistant Director of the eMotion and eCognition Laboratory where he conducts research in human robotic interaction and applications of video game and robot technology to such diverse areas as stroke rehabilitation, moral decision-making and autism therapy. Dr. Villano developed the RPA simulation that will be described in his Day 2 talk and has developed a Pilot Assessment Tool for the United States Air Force School of Medicine to be validated at Notre Dame and the US Air Force Academy this fall. Dr. Villano also teaches programming, video game and virtual reality development to both graduate and undergraduate students.